HPC Summit Week 2018 Session: “Computing Patterns for High Performance Multiscale Computing”

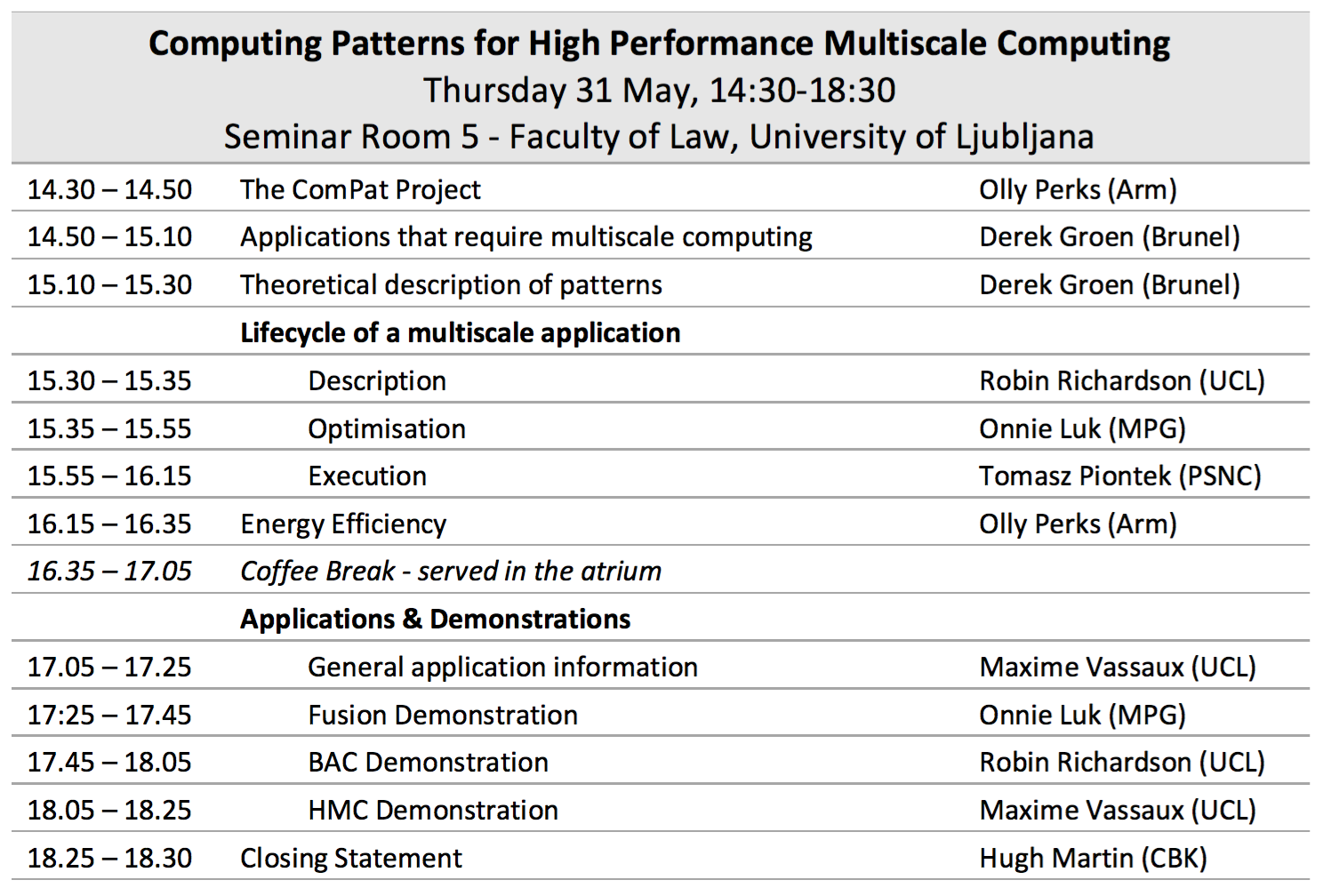

The ComPat Project is holding a 4-hour session of talks at the European HPC Summit Week 2018 on “Computing Patterns for High Performance Multiscale Computing”.

Abstract:

Multiscale phenomena are ubiquitous and they are the key to understanding the complexity of our world. Despite the significant progress achieved through computer simulations over the last decades, we are still limited in our capability to accurately and reliably simulate hierarchies of interacting multiscale physical processes that span a wide range of time and length scales, thus quickly reaching the limits of contemporary high performance computing at the tera- and petascale. Exascale supercomputers promise to lift this limitation. In this session we discuss the various aspects of developing multiscale computing algorithms that are capable of producing high-fidelity scientific results and that scale to exascale computing systems.

Date: 31 May 2018

Time: 14:30 – 19:00

Location: Seminar Room 5, Faculty of Law, University of Ljubljana, Slovenia

The session will feature talks and demonstrations from Dr Derek Groen, Dr Onnie Luk, Dr Tomasz Piontek, Dr Olly Perks, Dr Robin Richardson, and Dr Maxime Vassaux.

Registration:

To register for the HPC Summit Week, which will allow you to attend this ComPat session, please follow this link.

Talk Abstracts

1. The ComPat Project – Olly Perks

Multiscale phenomena are ubiquitous and they are the key to understanding the complexity of our world. Despite the significant progress achieved through computer simulations over the last decades, we are still limited in our capability to accurately and reliably simulate hierarchies of interacting multiscale physical processes that span a wide range of time and length scales, thus quickly reaching the limits of contemporary high performance computing at the tera and petascale. This talk will cover the role of the ComPat project in helping develop tools and methodologies to support multiscale simulations for exascale.

2. Applications that require multiscale computing – Derek Groen

In this talk I will reflect on how researchers use multiscale computing to solve their scientific problems. I will survey the range of applications where multiscale computing can be effectively applied, and will highlight several examples where its adoption has been particularly successful. I will also discuss the pros and cons of coupling multiple single-scale models versus monolithically implementing a new multiscale simulation code, and introduce the common building blocks that constitute a production multiscale application.

3. Theoretical description of patterns – Derek Groen

We propose multiscale computing patterns as a generic vehicle to realise load balanced, fault tolerant and energy aware high performance multiscale computing. Multiscale computing patterns should lead to a separation of concerns, whereby application developers can compose multiscale models and execute multiscale simulations, while pattern software realises optimized, fault tolerant and energy aware multiscale computing. In this talk I will introduce three multiscale computing patterns, present an example of each pattern, and discuss our vision of how this may shape multiscale computing in the exascale era.

4. Lifecycle of a multiscale application

4a. Description – Robin Richardson

The multiscale computing patterns software consists of three parts: the Description Part (the user input to the multiscale computing patterns software), the Optimisation Part (performance-oriented services and tools) and the Services Part (the underlying resource allocation service). The Description Part takes as input the task graph of the multiscale model expressed in textual form, the submodel definitions, and the simulation input and configuration. This information includes any restrictions on the resources that the single-scale simulations can use. The Description Part includes a translation step that converts these inputs from their original formats into a format suitable for the patterns performance services, where they are combined with any motifs identified in the task graphs. This forms the input to the Optimisation Part of the multiscale computing patterns software.

4b. Optimisation – Onnie Luk

In this talk, I will focus on how the optimisation part of ComPat’s Multiscale Computing Patterns software stack takes the profiles collected from the submodels in a multiscale application, and which metrics it uses to produce optimal execution scenarios based on the underlying computing pattern.

4c. Execution – Tomasz Piontek

The aim of this talk is to present the challenges of executing multiscale applications in a real, distributed, production HPC environment consisting of single or multiple Tier-0/1 computing resources. The talk will be devoted to a set of QCG middleware services developed or adapted in the ComPat project. These services allow efficient execution of multiscale applications using the concept of High Performance Multiscale Computing Patterns proposed in the project, with particular emphasis on optimising energy usage.

5. Energy Efficiency – Olly Perks

Energy consumption is a key consideration for HPC, especially at exascale. The framework developed as part of the ComPat project presents a unique view on energy consumption and performance, and the ability to compare across multiple HPC systems. By collecting sufficient data, we are able to predict anticipated energy usage – and target platforms so as to minimise it – for each component of a multiscale model. This talk will present the data collection and assimilation framework, in addition to the feedback loop for utilising this data. We will also present case studies analysing example data.

6. Applications & Demonstrations

6a. General application information – Maxime Vassaux

As part of the ComPat project, we have been enabling application software to interface with the high-level tools and middleware services of the ComPat technology stack, with the aim of increasing the efficiency of these simulations. Each of the applications in the demo session illustrates one of the computing patterns identified in the project (Extreme Scaling, Replica Computing and Heterogeneous Multiscale Computing), with domains in nuclear fusion, biochemistry and materials science respectively. We briefly describe the scientific area of each application and how the ComPat tools assist in running it.

6b. Fusion Demonstration – Onnie Luk

Understanding the dependency between micro-scale plasma turbulence and macro-scale transport is essential in predicting the performance of fusion plasma devices. Currently this dependency is not well understood due to the highly disparate spatial and temporal scales involved. The ComPat project offers a framework that couples existing and well-tested single-scale models to study such multiscale problems. In this session I will give a brief introduction to how the ComPat framework is applied to the fusion application in the form of the extreme scaling pattern. A live demonstration will follow to show how the ComPat Multiscale Computing Patterns software is used.

6c. BAC Demonstration – Robin Richardson

In this demo, we will illustrate how the ComPat technology stack can facilitate replica computing, where numerous instances of a single job are run, allowing the user to collect statistics and investigate the inherent variations in results from identical simulations. We will illustrate replica computing through the Binding Affinity Calculator (BAC), an automated workflow tool for free energy calculations based on molecular simulation. The underlying computational method is based on classical molecular dynamics (MD). These MD simulations are coupled to the molecular mechanics Poisson-Boltzmann surface area (MMPBSA) method to calculate the binding free energies. For purposes of reliability, ensembles of replica MD calculations are performed for each method, and we have found that ca. 25 of these are required per MD simulation in order to guarantee reproducibility of predictions. This is due to the intrinsic sensitivity of MD to the initial conditions, since the dynamics is chaotic. We shall illustrate in this demo of BAC how the ComPat tools allow the user to run ensembles of replicas and, if required, distribute these replicas over multiple computing resources.
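The replica computing idea described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not BAC or any ComPat tool: `run_replica` is a hypothetical stand-in that iterates a chaotic logistic map instead of running MD, and a standard-library thread pool stands in for the computing resources over which replicas would be distributed.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor
from typing import Tuple


def run_replica(seed: int, steps: int = 1000) -> float:
    """Toy stand-in for one MD replica (hypothetical, not BAC).

    Iterates a chaotic logistic map from a seed-dependent initial
    condition and returns its time average as the "observable".
    """
    rng = random.Random(seed)
    x = rng.uniform(0.1, 0.9)  # replica-specific initial condition
    total = 0.0
    for _ in range(steps):
        x = 3.9 * x * (1.0 - x)  # chaotic regime of the logistic map
        total += x
    return total / steps


def run_ensemble(n_replicas: int = 25, workers: int = 4) -> Tuple[float, float]:
    """Run independent replicas and return (mean, standard error)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so results are reproducible.
        results = list(pool.map(run_replica, range(n_replicas)))
    mean = statistics.mean(results)
    stderr = statistics.stdev(results) / n_replicas ** 0.5
    return mean, stderr


if __name__ == "__main__":
    mean, stderr = run_ensemble()
    print(f"observable = {mean:.4f} +/- {stderr:.4f}")
```

The default of 25 replicas mirrors the ensemble size quoted in the abstract. In a production setting each replica would be a separate HPC job rather than a thread.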

6d. HMC Demonstration – Maxime Vassaux

The “heterogeneous multiscale computing” (HMC) pattern, as defined in the ComPat project, describes a workflow coupling between (in the simplest case) two models of different kinds (“global” and “local”). The first, global, model is generally a lightweight iterative process, in which at each iteration the global model calls an arbitrary number of instances of the local model to provide missing data. The second, local, model is more costly in comparison, which produces a significant load imbalance between the execution of the two models. We will demonstrate how to integrate the PilotJob Manager, developed by PSNC as part of the QCG middleware toolkit, into an existing HMC workflow, and how this can help bypass the scheduling issues associated with such a computing pattern. The main idea is to sacrifice one core of the global allocation to coordinate the execution of a large number of instances of the local model, thus avoiding the substantial idle time induced by an a priori, static allocation of resources.
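The control flow of the pattern described above can be sketched as follows. This is a minimal illustration under stated assumptions and does not use the QCG PilotJob Manager API: `local_model` and `global_step` are hypothetical placeholders, and a standard-library worker pool stands in for the coordinator that hands local-model instances to whichever worker is free.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def local_model(strain: float) -> float:
    """Hypothetical costly local model: returns a stress for a strain."""
    return 2.0 * strain + 0.1 * strain ** 3


def global_step(state: List[float], stresses: List[float],
                dt: float = 0.1) -> List[float]:
    """Hypothetical cheap global update that consumes the local results."""
    return [s - dt * sigma for s, sigma in zip(state, stresses)]


def run_hmc(state: List[float], iterations: int = 5,
            workers: int = 4) -> List[float]:
    # This loop plays the role of the single coordinating core: at each
    # global iteration it submits one local-model instance per point, and
    # free workers pick up pending instances dynamically instead of
    # relying on a static, a priori assignment of resources.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(iterations):
            stresses = list(pool.map(local_model, state))
            state = global_step(state, stresses)
    return state


if __name__ == "__main__":
    print(run_hmc([1.0, 0.5, 0.0]))
```

Threads are used here only to keep the sketch self-contained. Real local-model instances would be separate processes or jobs launched inside the existing allocation.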

Speaker Biographies

Dr Derek Groen has been a Lecturer in Simulation and Modelling since 2015, and is a 2014 Fellow of the Software Sustainability Institute. He specializes in multiscale simulation, high performance computing and distributed computing, and has published 25 peer-reviewed journal papers. Derek works on constructing multiscale models using the HemeLB bloodflow simulation environment, and won the ARCHER Early Career Impact Award in 2015 as a result. He also leads a small research group on agent-based multiscale modelling of irregular migration. Derek has previously developed multiscale modelling techniques for nanocomposites, an effort which resulted in the publication of a Feature Article in Advanced Materials (IF 18.96). Derek is lead developer of the FabSim toolkit and the MPWide communication library, and has collaborated in the development of MUSCLE 2 and the predecessor of AMUSE (MUSE). Derek has experience with a wide range of applications, including methods such as lattice-Boltzmann, stellar and cosmological N-body dynamics, molecular dynamics and agent-based modelling. He obtained his PhD from the University of Amsterdam in 2010, where he ran large cosmological simulations geographically distributed across up to four supercomputers. He has also worked on a variety of performance optimization projects; he was involved in the CRESTA project and has worked on extreme scaling of codes such as LB3D and HemeLB.

Dr Onnie Luk is a Ph.D. graduate from the University of California at Irvine, where she conducted her research on the role of convective cells in the nonlinear interaction of kinetic Alfvén waves. She also has experience in analyzing magnetometer data obtained by the Galileo spacecraft. Currently, she is a postdoctoral researcher at the Max-Planck-Institut für Plasmaphysik. She is part of the ComPat project, in which she explores time bridging methods to connect turbulence and transport models in a component-based multiscale fusion plasma simulation.

Dr Oliver Perks is a Field Application Engineer at Arm, providing application porting and optimisation support to customers, through the use of the Arm HPC tools. Oliver obtained his PhD from Warwick University, in profiling of HPC applications, and subsequently moved into industry to practice performance optimisation for large scale production workloads. Having joined Allinea, Oliver began working on a number of H2020 projects, including ComPat, and continues that involvement as a representative of Arm.

Dr Maxime Vassaux (mvassaux.github.io) is a Post Doctoral Research Associate (PDRA) at the Centre for Computational Science, University College London (UCL). He holds a Masters in Structural Engineering (Université Pierre et Marie Curie, 2011), and a PhD in Computational Mechanics (École Normale Supérieure, 2015). His broad research background has spanned the formulation of theoretical constitutive equations for concrete and quasi-brittle materials, and their calibration using a multiscale approach based on lattice-particle methods. Using similar methods he then studied the mechanical behaviour of adherent and migrating mesenchymal stem cells. In order to extend the variety of scales he could target using his computational skills, he then focused on implementing a heterogeneous multiscale method based on the finite element method and molecular dynamics to analyse the non-equilibrium thermodynamics of complex materials. He now applies this methodology to understand the emergence of fracture-related properties in polymer nanocomposites. He has contributed to several research projects, including ComPat (EU) and SinusSurf (FR).

Tomasz Piontek graduated from Poznań University of Technology in Computer Science. He is a member of the Applications Department at Poznań Supercomputing and Networking Center, Poland, and head of the Large Scale Applications and Services Department. He has been involved in many EU-funded R&D projects in the areas of distributed and parallel computing, including QosCosGrid and MAPPER. His research activities are focused on the modelling of advanced applications for modern hybrid HPC architectures, and on the scalability and availability of online services.

Dr Robin Richardson is a Computational Physicist at the Centre for Computational Science, University College London (UCL). His research background has spanned the parameterisation of force fields for Molecular Dynamics (MD) simulations of Shape Memory Alloys, Finite Element (FE) simulations of molecular motors in flagella, lattice-Boltzmann simulations of cerebral blood flow in stroke patients, and multiscale materials prediction through coupling of MD with FE. He has experience of developing scalable codes across multiple supercomputing platforms. He contributes to several ongoing research projects, including ComPat and CompBioMed (EU) and CERREBRAL (Qatar National Research Fund).